Affiliate links on Android Authority may earn us a commission.

On-device, power-efficient AI is the next tech frontier

July 30, 2025

Advances in deep learning have enabled consumers to enjoy products like Google Assistant, which recognizes voice input with high accuracy. However, the processing behind these advances has happened in the cloud, on big servers running in data centers.

It works like this: a user asks the digital assistant a question, which is recorded and then pre-processed by the smartphone. The data is sent over the Internet to a server, which processes the request and sends the result back over the Internet to the device.
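Because the stages of that round trip happen one after another, the end-to-end latency is simply their sum. The minimal Python sketch below models this; the stage names and any millisecond values passed to it are hypothetical placeholders, not measurements of Google Assistant or any other real service.

```python
from dataclasses import dataclass


@dataclass
class CloudRoundTrip:
    """Hypothetical per-stage timings (milliseconds) for one cloud-processed query."""
    preprocess_ms: float  # recording and pre-processing on the phone
    upload_ms: float      # sending the data over the Internet to the server
    server_ms: float      # processing the request on the server
    download_ms: float    # sending the result back over the Internet

    def total_ms(self) -> float:
        # The stages run sequentially, so end-to-end latency is their sum.
        return self.preprocess_ms + self.upload_ms + self.server_ms + self.download_ms


def on_device_total_ms(preprocess_ms: float, local_inference_ms: float) -> float:
    """Latency when inference runs locally: the two network legs disappear."""
    return preprocess_ms + local_inference_ms
```

With placeholder values, `CloudRoundTrip(10.0, 40.0, 20.0, 40.0).total_ms()` returns `110.0`. Running inference on the device removes the upload and download terms entirely, but the local inference term then depends on how fast the phone's own hardware is.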

Until now, it hasn’t been possible to do all of this processing locally, on the device. However, things are changing: Machine Learning is starting to run locally rather than in the cloud.

Mobile devices (laptops, tablets and smartphones) have one key difference from other types of computing devices: they run off a battery, which makes power efficiency essential and the choice of processor crucial.
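The arithmetic behind that point is short: the energy one inference costs is power multiplied by time, and a battery holds a fixed amount of energy. The sketch below turns this into a back-of-envelope budget. The battery capacity, power draw and latency figures are hypothetical, chosen only to keep the numbers round, and the model ignores everything else the phone spends energy on.

```python
# Back-of-envelope battery budget for on-device inference.
# All figures used with these functions are hypothetical placeholders.


def energy_per_inference_mj(power_w: float, latency_ms: float) -> float:
    """Energy = power x time; watts multiplied by milliseconds gives millijoules."""
    return power_w * latency_ms


def inferences_per_charge(battery_wh: float, energy_mj: float) -> float:
    """How many inferences a full battery could power if it did nothing else."""
    battery_mj = battery_wh * 3600.0 * 1000.0  # 1 Wh = 3600 J = 3,600,000 mJ
    return battery_mj / energy_mj
```

Under these made-up numbers, a processor drawing 2 W for 50 ms per inference uses 100 mJ and could run 360,000 inferences on a 10 Wh battery, while one drawing 1 W for 20 ms uses 20 mJ and could run 1,800,000. Power and latency both appear in the product, so a processor that is faster and draws less power gains twice.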

Smartphones are designed with power-efficient CPUs and GPUs. Now a new class of technology is emerging that also demands power efficiency: on-device Machine Learning (ML) and Artificial Intelligence (AI).

To perform these Machine Learning tasks on the device, smartphones need a dedicated Machine Learning processor. Sometimes called Neural Processing Units (NPUs) or Neural Engines, these hardware blocks let mobile devices run recognition tasks (inference) without using the cloud. This means smartphones can now handle Machine Learning tasks such as image recognition or voice recognition locally, without sending data to a big server in the cloud for processing.
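Inference means evaluating a network whose weights were learned beforehand: the device only runs the forward pass, with no training and no network access. The toy Python sketch below shows that computation for a two-layer network. The weights, layer sizes and "keyword" labels are invented for illustration and do not come from any real model; the multiply-accumulate loop in `dense` is the kind of work a dedicated NPU is built to speed up, at far larger scale.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Sequence[float], weights: Matrix, bias: Sequence[float]) -> Vector:
    """Fully connected layer: y[j] = sum_i x[i] * W[j][i] + b[j]."""
    return [sum(xi * wji for xi, wji in zip(x, row)) + bj
            for row, bj in zip(weights, bias)]


def relu(v: Sequence[float]) -> Vector:
    """Element-wise max(0, a)."""
    return [max(0.0, a) for a in v]


def argmax(v: Sequence[float]) -> int:
    """Index of the largest score."""
    return max(range(len(v)), key=lambda i: v[i])


# Hypothetical "pre-trained" weights: 3 inputs -> 2 hidden units -> 2 classes.
W1 = [[0.5, -0.2, 0.1],
      [-0.3, 0.8, 0.4]]
B1 = [0.0, 0.1]
W2 = [[1.0, -1.0],
      [-0.5, 1.2]]
B2 = [0.0, 0.0]
LABELS = ["no keyword", "keyword"]  # hypothetical voice-recognition classes


def infer(features: Sequence[float]) -> str:
    """Forward pass only: weights are fixed, nothing is sent off the device."""
    hidden = relu(dense(features, W1, B1))
    scores = dense(hidden, W2, B2)
    return LABELS[argmax(scores)]
```

For example, `infer([0.2, 0.9, 0.5])` returns `"keyword"` and `infer([1.0, 0.0, 0.0])` returns `"no keyword"` with these toy weights.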

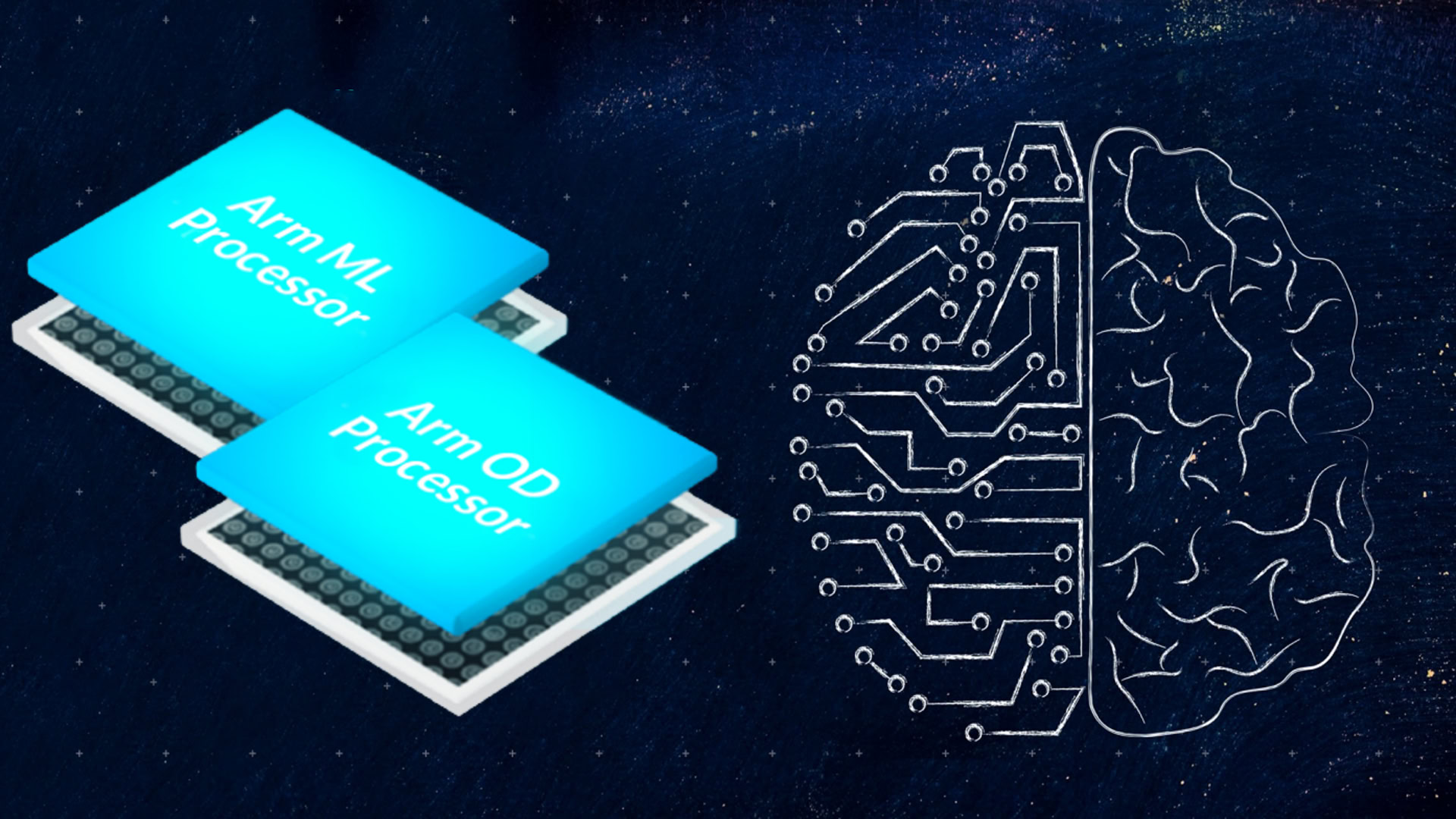

Arm, which designs power-efficient yet high-performance CPU cores as well as mobile GPUs, recently announced a new Machine Learning platform codenamed Project Trillium. As part of Trillium, it announced the new Arm Machine Learning processor and its second-generation Arm Object Detection (OD) processor.

The new ML processor is not an extension or modification of existing processor components such as the CPU or GPU. It is designed from the ground up to offer large performance gains for on-device inference using pre-trained neural networks, all within the power-efficiency constraints of mobile devices.
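One common way inference hardware saves power and memory bandwidth is to work with 8-bit integers instead of 32-bit floats. The sketch below assumes symmetric, per-tensor int8 quantization, a widely used scheme in mobile inference; it illustrates the general technique and is not a description of how Arm's ML processor works internally. Both vectors are scaled into int8, multiplied and accumulated as integers, and rescaled to a real value once at the end.

```python
from typing import List, Sequence, Tuple


def quantize_symmetric(values: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to int8 in [-127, 127] using a single scale for the whole tensor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(x: Sequence[float], w: Sequence[float]) -> float:
    """Dot product computed with int8 operands and an integer accumulator."""
    qx, sx = quantize_symmetric(x)
    qw, sw = quantize_symmetric(w)
    # Python ints never overflow; hardware would typically use an int32 accumulator.
    acc = 0
    for a, b in zip(qx, qw):
        acc += a * b  # integer multiply-accumulate
    # A single floating-point rescale recovers an approximation of the real result.
    return acc * sx * sw
```

For `x = [0.5, -1.0, 0.25]` and `w = [0.2, 0.4, -0.8]`, the exact dot product is -0.5 and `int8_dot(x, w)` lands within about 0.01 of it; that small difference is the quantization error traded for cheaper integer arithmetic.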

5 reasons why on-device AI is the next tech frontier

There are five important reasons why the mobile industry is focusing on this new technology.

It isn’t just about virtual assistants and clever photo apps

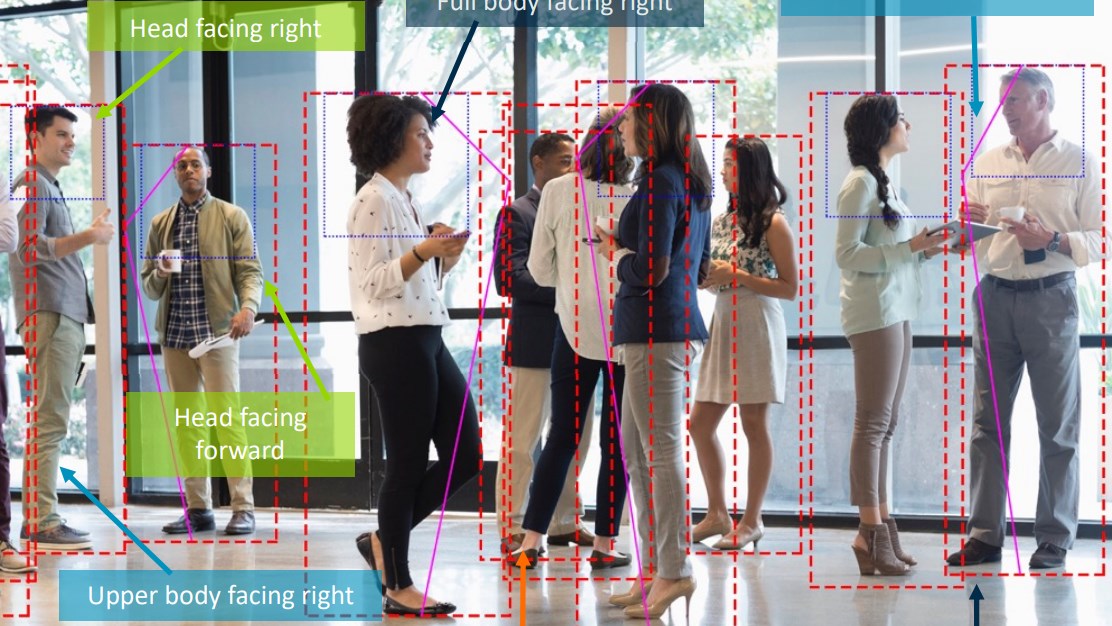

While the use of Machine Learning and Artificial Intelligence in virtual assistants and “AI-enabled” photography apps is highly visible to consumers today, AI “at the edge” (on the device) extends far beyond those applications. Advances in computer vision will be used in self-driving cars, and on-device AI improvements will affect digital surveillance cameras, smart home cameras, and industrial automation applications. The possibilities are endless.

IDC recently published a white paper identifying the top ten trends among enabling technologies for 2018. According to the report, 2018 will be the year of AI at the edge, a trend that covers efforts such as Arm’s Project Trillium.

As we move into a new information age, where Machine Learning and Artificial Intelligence will play an important role, on-device inference with high performance and energy efficiency will become an enabling technology. Arm’s leadership in power-efficient designs for high-performance mobile devices means it is well positioned for this new frontier.

Promoted by Arm
